What is the best way to test understanding? (Covid series: 10 of 15)

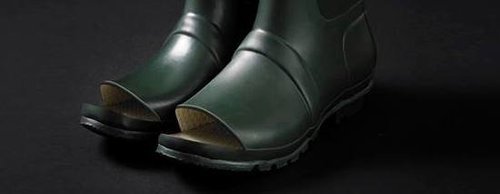

All too often we find that well-intentioned training programmes fail to deliver as expected because a critical part of the package has been left out. The image of the wellington boots is a witty April Fool from Hunter Wellies, showing their Open Toe gumboot with unique ‘toe breathability technology’. I’ve chosen the image because it illustrates what happens when a key part of a design is left out: the product fails to do the intended task. In terms of training programmes, the missing piece I’m referring to is a check on the effectiveness of the content: the testing of understanding.

So what is the best way to test understanding?

Formal exams are recognised and acknowledged as being important for their rigour, but academic competence, intelligence and understanding are not completely straightforward things to measure. There is no single method that fully captures an individual’s ability, but society accepts that we need some formal systems.

Intuitively we understand that we need divisions between ability levels. Without these divisions learners risk being in learning environments that are unsuited to them, and this will be counter-productive. We understand that the only fair, impartial and scalable method for division is by examination. Exams can be good at this function because they are not vague. Exams should have clear, measurable guidelines against which outcomes can be assessed.

However, it is a simple and sad truth that exam guidelines are not always clear, and therefore not always measurable. This can lead to an all too familiar scenario in which students who expected to be tested on their knowledge and understanding are in fact being tested on their test-taking ability. For instance, the common practice of repeating past papers trains candidates to master the tests rather than the subject.

Subjective or qualitative papers with essay questions are not as easy to measure as quantitative papers such as maths, and different examiners will often mark the same paper by the same candidate differently. Equally, some exams simply require students to cram information for the test with the somewhat inevitable result that the information is almost immediately forgotten after the test.

Formal exams are valid and useful if guidelines are clear and questions seek to extract understanding rather than simple recall of facts. But other techniques such as informal testing by multiple-choice tests can also be useful and valid as they inherently test knowledge.

Multiple-choice tests can provide an effective, efficient and highly scalable way to assess learning. They can be used across a range of levels, from basic recall of facts to analysis and evaluation of a topic, and they are less susceptible to guessing than true/false questions, making them a more reliable means of assessment. The reliability is enhanced when the number of questions focused on a single learning objective is increased, and the objective scoring associated with multiple-choice tests removes the problems of inconsistency in scoring that can be found with essay questions.
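The point about guessing can be put into rough numbers. The sketch below is illustrative only: it assumes a candidate who guesses every question purely at random, a hypothetical pass mark of 60%, and four equally plausible options per multiple-choice question. None of these figures come from the studies cited in this post.

```python
from math import comb


def chance_of_passing_by_guessing(num_questions: int,
                                  options_per_question: int,
                                  pass_mark: int) -> float:
    """Probability that pure random guessing gives at least pass_mark correct answers.

    Assumes every question has the same number of options and that
    each guess is independent (a simple binomial model).
    """
    p = 1 / options_per_question
    return sum(
        comb(num_questions, k) * p**k * (1 - p) ** (num_questions - k)
        for k in range(pass_mark, num_questions + 1)
    )


# Compare true/false (2 options) with four-option multiple choice
# at an assumed 60% pass mark, for tests of increasing length.
for n in (10, 20, 40):
    pass_mark = 6 * n // 10
    true_false = chance_of_passing_by_guessing(n, 2, pass_mark)
    four_option = chance_of_passing_by_guessing(n, 4, pass_mark)
    print(f"{n} questions: true/false {true_false:.4f}, four-option {four_option:.6f}")
```

Under these assumptions the chance of passing by luck is lower for four-option questions than for true/false questions, and it falls further as more questions are added.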

Students can typically answer a multiple-choice question much more quickly than an essay question and this means that tests based on multiple-choice can focus on a relatively broad representation of course material, thus increasing the validity of the assessment.

The construction of good multiple-choice questions is central to taking advantage of the potential strengths on offer.

Some examples of things that multiple-choice questions should contain:

- relevant material only. Irrelevant material can decrease the reliability and the validity of the test scores (Haladyna and Downing 1989)

- meaningful questions. For example, it is best to avoid questions such as “Which of the following is true?” and instead ask “Approximately how many….”

- positives. Questions should only be constructed as negative when significant learning outcomes require it. Students often have difficulty understanding items with negative phrasing (Rodriguez 1997), so avoid questions such as ‘Which of these statements is not correct?’

- alternatives which are all plausible. Incorrect alternatives are called distractors and these should be credible enough to be selected by candidates who have not completed their learning or have failed to understand the content fully. Alternatives that are implausible don’t serve as functional distractors and shouldn’t be used. Common student errors provide the best source of distractors.

- alternatives which are free from clues about which response is correct. It doesn’t take much for test-takers to be alert to inadvertent clues to the correct answer such as differences in grammar or length.

- meaningful answers. It is best to avoid answer options such as “all of the above” or “none of the above”. When “all of the above” is used as an answer, test-takers who can identify more than one alternative as correct can select the correct answer even if unsure about other alternatives. Equally, when “none of the above” is used, test-takers who can eliminate a single option can thereby eliminate other options. In either case, candidates can use partial knowledge to arrive at a correct answer.

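Some of the guidelines above are mechanical enough to check automatically before a question reaches a human reviewer. The following is a minimal sketch, not an established tool: the `Item` class, the word lists and the length threshold are all hypothetical choices made for illustration, and plausibility of distractors still needs human judgement.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """A multiple-choice question: the stem plus its answer alternatives."""
    stem: str
    alternatives: list[str]


# Hypothetical word lists; adjust to suit your own question bank.
NEGATIVE_WORDS = ("not", "except", "never")
CATCH_ALL = ("all of the above", "none of the above")


def review_item(item: Item) -> list[str]:
    """Return warnings for guideline breaches that can be spotted mechanically."""
    warnings = []

    # Negative phrasing in the stem
    stem_words = item.stem.lower().replace("?", " ").replace(":", " ").split()
    if any(word in stem_words for word in NEGATIVE_WORDS):
        warnings.append("negative phrasing in stem")

    # Catch-all answers such as "all of the above"
    for alt in item.alternatives:
        if alt.strip().lower().rstrip(".") in CATCH_ALL:
            warnings.append(f"catch-all alternative: {alt!r}")

    # Length differences can act as an inadvertent clue.
    # The factor of 2 is an arbitrary, assumed threshold.
    lengths = [len(alt) for alt in item.alternatives]
    if lengths and max(lengths) > 2 * min(lengths):
        warnings.append("alternatives differ widely in length (possible clue)")

    return warnings


question = Item(
    stem="Which of these statements is not correct?",
    alternatives=["Paris", "Rome", "None of the above"],
)
for warning in review_item(question):
    print(warning)
```

A check like this only flags candidates for review. Whether a negative stem is justified by the learning outcome remains a decision for the question writer.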

Multiple-choice questions can also be written to test higher-level thinking by designing problems that require multi-logical thinking, defined as “thinking that requires knowledge of more than one fact to logically and systematically apply concepts to a ...problem” (Morrison and Free, 2001).

Testing understanding and options to achieve scale

If we can accept that assessing understanding is an important part of learning, then for self-paced distance learning courses, online testing must be highly appropriate.

References

Haladyna, T.M., and Downing, S.M. (1989) A taxonomy of multiple-choice item-writing rules, Applied Measurement in Education

Morrison, S., and Free, K.W. (Jan 2001) Writing multiple-choice test items that promote and measure critical thinking, Journal of Nursing Education

Rodriguez, M.C. (April 1997) The art and science of item writing: A meta-analysis of multiple-choice format effects

This post is an update of the original from Coracle posted on 3rd April 2014.